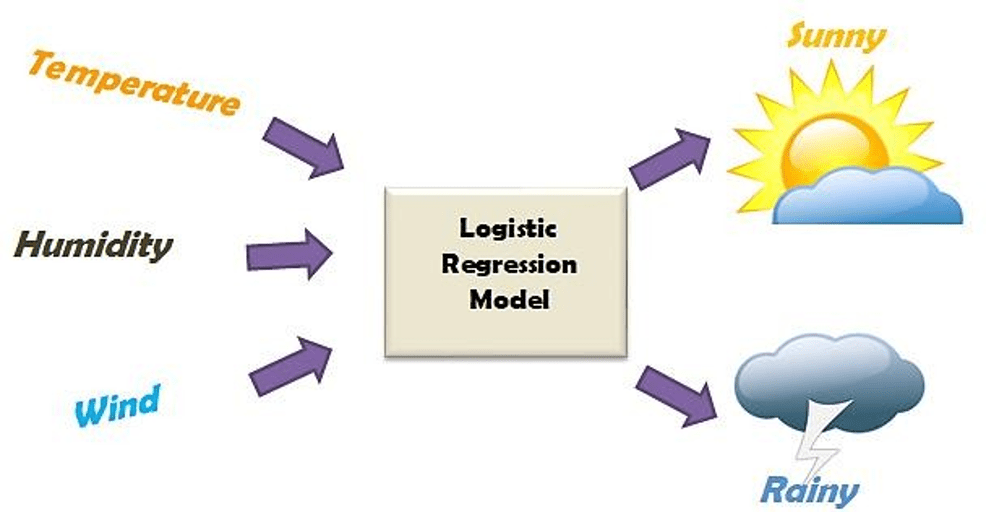

Logistic regression için test verisi olarak kanserli hücrelerin özelliklerini kullanıcaz.

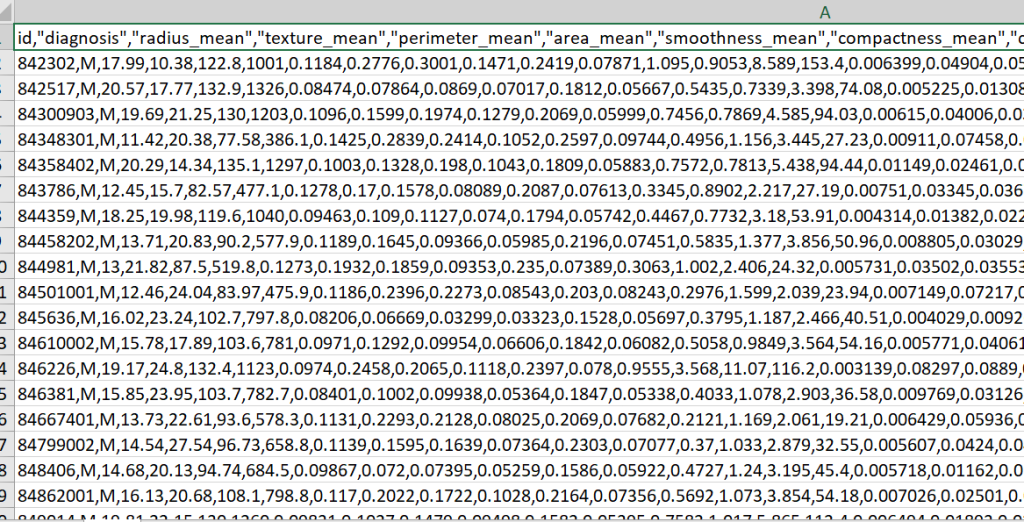

| id,”diagnosis”,”radius_mean”,”texture_mean”,”perimeter_mean”,”area_mean”, “smoothness_mean”,”compactness_mean”,”concavity_mean”,”concave points_mean”,”symmetry_mean”,”fractal_dimension_mean”, “radius_se”,”texture_se”,”perimeter_se”,”area_se”,”smoothness_se”, “compactness_se”,”concavity_se”,”concave alanlarının olduğu bir veri seti alıyoruz. |

# %% libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# %% read csv

#verinin içinden unnamed alanını kaldırıyoruz çünkü null alanlar modelde olmamalı.

data = pd.read_csv("C:/path/data.csv")

data.drop(["Unnamed: 32","id"],axis=1,inplace = True)

data.diagnosis = [1 if each == "M" else 0 for each in data.diagnosis]

print(data.info())

y = data.diagnosis.values

x_data = data.drop(["diagnosis"],axis=1)

# %% normalization

#bütün değerleri 0 ve 1 arasında bir değere dönüştürür.

x = (x_data - np.min(x_data))/(np.max(x_data)-np.min(x_data)).values

# %% train test split

from sklearn.model_selection import train_test_split

#%20'si test %80 train olarak ayırdık.

x_train, x_test, y_train, y_test = train_test_split(x,y,test_size = 0.2,random_state=42)

x_train = x_train.T

x_test = x_test.T

y_train = y_train.T

y_test = y_test.T

print("x_train: ",x_train.shape)

print("x_test: ",x_test.shape)

print("y_train: ",y_train.shape)

print("y_test: ",y_test.shape)

# %% parameter initialize and sigmoid function

# dimension = 30

# 30 tane future var. Ağırlıkları 0.01 olarak verdik.

def initialize_weights_and_bias(dimension):

w = np.full((dimension,1),0.01)

b = 0.0

return w,b

# w,b = initialize_weights_and_bias(30)

#sigmoid function yazılır

def sigmoid(z):

y_head = 1/(1+ np.exp(-z))

return y_head

# print(sigmoid(0))

def forward_backward_propagation(w,b,x_train,y_train):

# forward propagation

z = np.dot(w.T,x_train) + b

y_head = sigmoid(z)

loss = -y_train*np.log(y_head)-(1-y_train)*np.log(1-y_head)

cost = (np.sum(loss))/x_train.shape[1] # x_train.shape[1] is for scaling

# backward propagation

derivative_weight = (np.dot(x_train,((y_head-y_train).T)))/x_train.shape[1] # x_train.shape[1] is for scaling

derivative_bias = np.sum(y_head-y_train)/x_train.shape[1] # x_train.shape[1] is for scaling

gradients = {"derivative_weight": derivative_weight, "derivative_bias": derivative_bias}

return cost,gradients

#%% Updating(learning) parameters

#update için weight,bias,x_train,y_train,learning weight gerek.

#learning weight kullanarak ne kadar hızlı öğreneceğini belirliyoruz.

#number_of_iterarion : kaç kez işlemin tekrarlanacağını belirleriz.

def update(w, b, x_train, y_train, learning_rate,number_of_iterarion):

cost_list = []

cost_list2 = []

index = []

# updating(learning) parameters is number_of_iterarion times

for i in range(number_of_iterarion):

# make forward and backward propagation and find cost and gradients

cost,gradients = forward_backward_propagation(w,b,x_train,y_train)

cost_list.append(cost)

# lets update

w = w - learning_rate * gradients["derivative_weight"]

b = b - learning_rate * gradients["derivative_bias"]

if i % 10 == 0:

cost_list2.append(cost)

index.append(i)

print ("Cost after iteration %i: %f" %(i, cost))

# we update(learn) parameters weights and bias

parameters = {"weight": w,"bias": b}

plt.plot(index,cost_list2)

plt.xticks(index,rotation='vertical')

plt.xlabel("Number of Iterarion")

plt.ylabel("Cost")

plt.show()

return parameters, gradients, cost_list

#%% # prediction

def predict(w,b,x_test):

# x_test is a input for forward propagation

z = sigmoid(np.dot(w.T,x_test)+b)

Y_prediction = np.zeros((1,x_test.shape[1]))

# if z is bigger than 0.5, our prediction is sign one (y_head=1),

# if z is smaller than 0.5, our prediction is sign zero (y_head=0),

for i in range(z.shape[1]):

if z[0,i]<= 0.5:

Y_prediction[0,i] = 0

else:

Y_prediction[0,i] = 1

return Y_prediction

# %% logistic_regression

def logistic_regression(x_train, y_train, x_test, y_test, learning_rate , num_iterations):

# initialize

dimension = x_train.shape[0] # that is 30

w,b = initialize_weights_and_bias(dimension)

# do not change learning rate

parameters, gradients, cost_list = update(w, b, x_train, y_train, learning_rate,num_iterations)

y_prediction_test = predict(parameters["weight"],parameters["bias"],x_test)

# Print test Errors

print("test accuracy: {} %".format(100 - np.mean(np.abs(y_prediction_test - y_test)) * 100))

logistic_regression(x_train, y_train, x_test, y_test,learning_rate = 1, num_iterations = 300)

Yukarıdaki işlemleri tek bir metod ile yapmanın yöntemi : Logistic regression with sklearn.

#%% sklearn with LR

#yukarıdaki işlemlerin tamamını yapan metod.

from sklearn.linear_model import LogisticRegression

lr = LogisticRegression()

lr.fit(x_train.T,y_train.T)

#lr.score bize test verisinden yüzde kaç doğru olduğu bilgisi verir.

print("test accuracy {}".format(lr.score(x_test.T,y_test.T)))